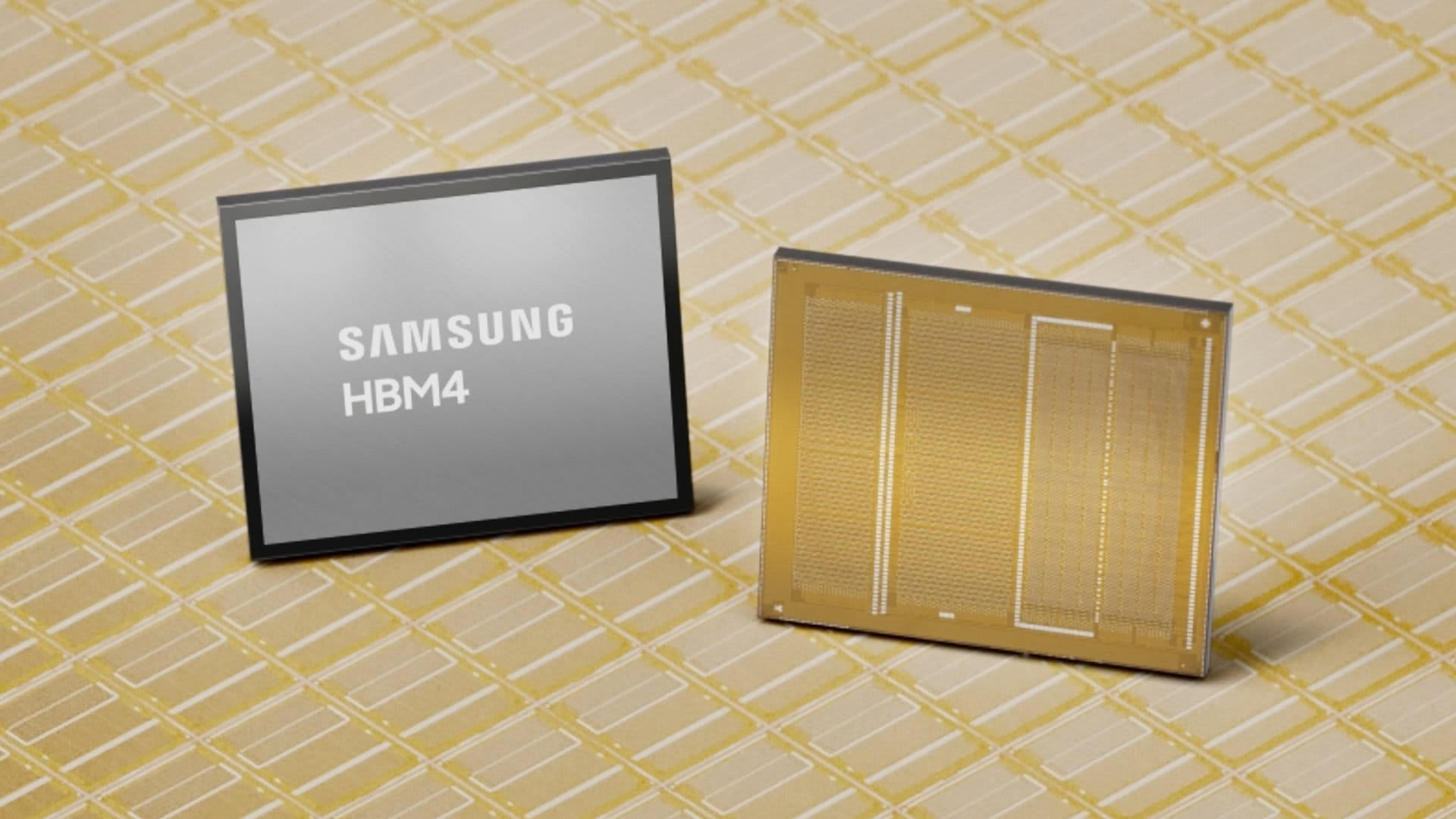

Samsung asking $700 for its HBM4 memory

Samsung has introduced what it calls the "industry-first commercial HBM4" and is asking roughly $700 per module, about 20–30% more than the previous-generation HBM3E, according to Yonhap News (via Jukan).

HBM4 is designed for AI datacentre workloads, and strong demand for both DRAM and high-bandwidth memory has tightened supply. Nvidia's next-generation AI "superchip" uses large amounts of HBM4. So far this year, Nvidia is the only customer to have requested HBM4, while others, including Google, remain focused on securing HBM3E.

There is a strong possibility that Nvidia will unveil accelerators equipped with Samsung's HBM at GTC 2026 in March, though whether these will include the six-trillion-transistor Vera Rubin "superchip" remains to be seen. Rumours also claim that Rubin will be the first product to use TSMC's A16 node. GTC is slated to begin in San Jose on March 16.

HBM has never gained a broad foothold in gaming. AMD experimented with it on its RX Vega cards, but that generation proved unpopular, and gaming graphics cards continue to rely on GDDR memory.
